Get Started with Dagger Cloud

Introduction

Dagger Cloud provides pipeline visualization, operational insights, and distributed caching for your Daggerized pipelines.

This guide helps you get started with Dagger Cloud. Here are the steps you'll follow:

- Sign up for Dagger Cloud

- Send telemetry to Dagger Cloud

- Visualize pipeline runs with Dagger Cloud

- Synchronize cache volumes with the Dagger Cloud experimental distributed cache (available on the Team plan)

Dagger Cloud includes plans for both individuals and teams. See more information about the plans.

Prerequisites

- You have an understanding of how Dagger works. If not, read the Dagger Quickstart.

- You have a GitHub account (required for Dagger Cloud identity verification). If not, register for a free GitHub account.

- You have a source code repository and a Dagger pipeline that interacts with it. If not, follow the steps in Appendix A to create and populate a GitHub repository with a sample application and Dagger pipeline.

Step 1: Sign up for Dagger Cloud

At the end of this step, you will have signed up for Dagger Cloud and obtained a Dagger Cloud token. If you already have a Dagger Cloud account and token, you may skip this step.

Follow the steps below to sign up for Dagger Cloud, create an organization and obtain a Dagger Cloud token.

- Sign up for Dagger Cloud by selecting a plan on the Dagger website. Click Continue with GitHub to log in with your GitHub account.

- After authorizing Dagger Cloud for your GitHub account, you'll create your organization.

  Naming your organization: Organization names may contain alphanumeric characters and dashes and are unique across Dagger Cloud. We recommend using your company name or team name for your organization.

- Review and select a Dagger Cloud subscription plan.

- If you selected the Team plan:

  - You will be presented with the option to add teammates to your Dagger Cloud account. This step is optional and not available in the Individual plan.

  - You will then enter your payment information. After your free 14-day trial completes, you will be automatically subscribed to the Dagger Cloud Team plan.

- Click Go to dashboard. The next step walks you through how to send telemetry to Dagger Cloud.
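The organization naming rule from the sign-up steps can be checked locally before you submit the form. The following is a minimal sketch, assuming the rule means ASCII letters, digits, and dashes only; the function name isValidOrgName is hypothetical, Dagger Cloud may enforce further limits (such as length) that this guide does not describe, and uniqueness can only be verified by Dagger Cloud itself.

```javascript
// Hypothetical helper (not part of Dagger Cloud): checks only the character
// rule stated in this guide, i.e. letters, digits, and dashes.
function isValidOrgName(name) {
  return typeof name === "string" && /^[A-Za-z0-9-]+$/.test(name)
}

console.log(isValidOrgName("acme-platform")) // true
console.log(isValidOrgName("acme platform")) // false (contains a space)
```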

Step 2: Send telemetry to Dagger Cloud

At the end of this step, you will have connected Dagger Cloud with your CI provider or CI tool using your Dagger Cloud token. If you have already connected Dagger Cloud with your CI provider/tool and don't see data yet in your dashboard, skip to the next step.

You can use Dagger Cloud with your CI and for your local development workflows. You instruct your Dagger Engine to connect to Dagger Cloud by referencing your unique Dagger Cloud token. Dagger Cloud creates this token automatically when you sign up.

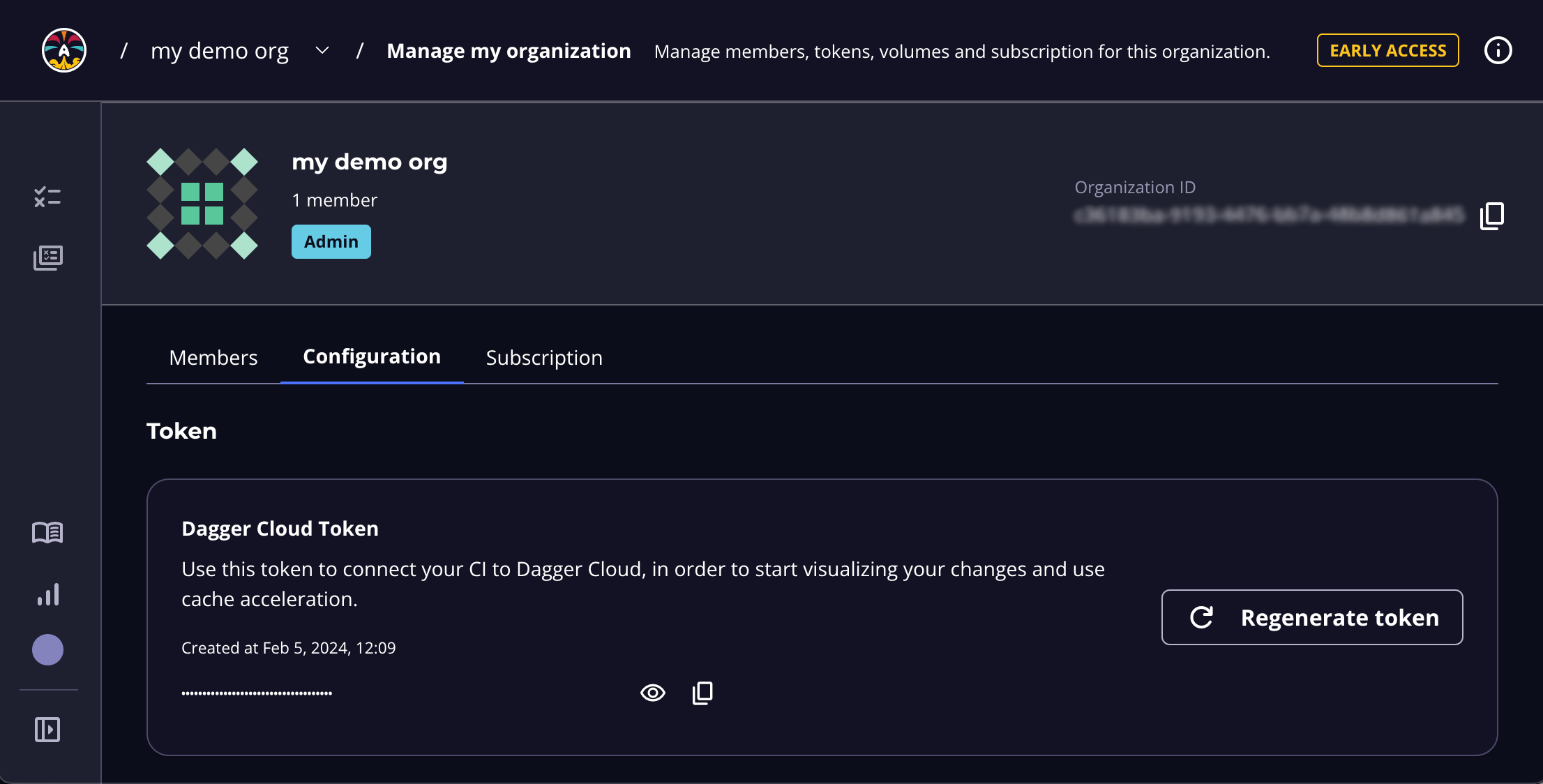

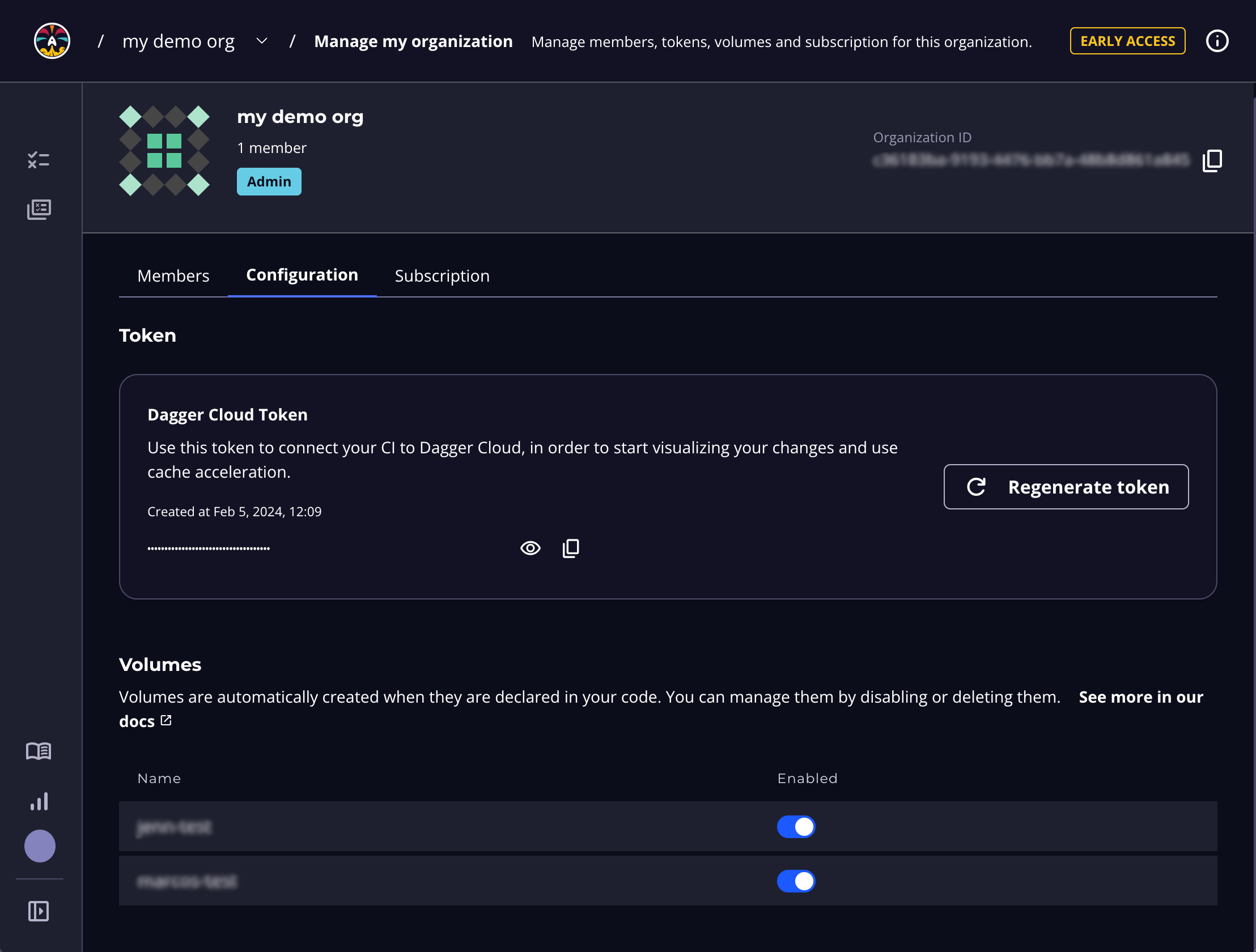

To find your token, open the Organization Settings page by clicking your profile photo in the left sidebar and then clicking Organization Settings, and then select the Configuration tab. You can also use this URL pattern: https://dagger.cloud/{Your Org Name}/settings?tab=configuration
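As a small illustration of the URL pattern above, the sketch below fills an organization name into it. The helper name settingsUrl and the sample organization name are hypothetical; only the URL pattern itself comes from this guide.

```javascript
// Hypothetical helper: substitutes the organization name into the
// settings URL pattern given in this guide.
function settingsUrl(orgName) {
  return `https://dagger.cloud/${encodeURIComponent(orgName)}/settings?tab=configuration`
}

console.log(settingsUrl("acme-platform"))
// https://dagger.cloud/acme-platform/settings?tab=configuration
```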

If you regenerate your token, you must replace the token wherever you've referenced it. To reduce operational interruptions, only regenerate your token if it has been leaked.

Once you have your token, you can use it to send telemetry from your CI and development machines.

Local development

You can visualize and debug your local Dagger pipeline runs with Dagger Cloud to identify issues before pushing them to CI.

To configure local development, set your Dagger Cloud token as an environment variable. For example: export DAGGER_CLOUD_TOKEN={your token}

Dagger Cloud uses metadata from your version control system (VCS), such as git, to populate some information in the user interface. Therefore, you should run your Dagger pipelines within a VCS context, such as a local directory linked to a cloned git repository.
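Before a local run, it can help to confirm that the token variable is actually present in the environment. The following is a minimal preflight sketch in JavaScript; the function name checkCloudToken and its messages are hypothetical and not part of the Dagger SDK. It only checks that DAGGER_CLOUD_TOKEN is a non-empty string and does not validate the token against Dagger Cloud.

```javascript
// Hypothetical preflight check; `env` defaults to the current process
// environment so the function can also be exercised with a plain object.
function checkCloudToken(env = process.env) {
  const token = env.DAGGER_CLOUD_TOKEN
  if (typeof token !== "string" || token.trim() === "") {
    return {
      ok: false,
      message: "DAGGER_CLOUD_TOKEN is not set, so the Dagger Engine has no token to connect to Dagger Cloud with.",
    }
  }
  return { ok: true, message: "DAGGER_CLOUD_TOKEN is set." }
}

const preflight = checkCloudToken()
console.log(preflight.message)
```

A pipeline script could call this check at startup and print the message as a warning rather than aborting, since the pipeline itself does not need the token to run.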

In CI

The general procedure to connect Dagger Cloud with a CI provider/CI tool is:

- Add your Dagger Cloud token to your CI workflows.

  - Store the Dagger Cloud token as a secret with your CI.

    Keep your Dagger Cloud token private

    You must store the Dagger Cloud token as a secret (not plaintext) with your CI provider and reference it in your CI’s workflow. Using a secret protects your Dagger Cloud account from being used by forks of your project. We provide links in the steps below for configuring secrets with popular CI tools.

  - Add the secret to your CI environment as a variable named DAGGER_CLOUD_TOKEN.

- For Team plan subscribers with ephemeral CI runners only: Add a wait step (example below) for the Docker configuration to allow the Dagger Engine to push cache volumes to the experimental Dagger Cloud distributed cache. Subscribers on the Individual plan do not have access to the experimental distributed cache and do not need to add this step.

- If you are using GitHub Actions, install the Dagger Cloud GitHub app for GitHub Checks. This app adds a GitHub Check to your pull requests and links to the run status and pipeline visualization for the commit in Dagger Cloud.

You can use Dagger Cloud whether you're hosting your own CI runners and infrastructure or using hosted/SaaS runners.

When using self-hosted CI runners on AWS infrastructure, NAT Gateways are a common source of unexpected network charges. It's advisable to set up an Amazon S3 Gateway for these cases. Refer to the AWS documentation for detailed information on how to do this.

- GitHub Actions

- GitLab CI

- CircleCI

- Jenkins

- Argo Workflows

GitHub Actions

- Create a new secret for your GitHub repository named DAGGER_CLOUD_TOKEN, and set it to the value of the token obtained in Step 1. Refer to the GitHub documentation on creating repository secrets.

- Update your GitHub Actions workflow and add the secret to your dagger run step as an environment variable. The environment variable must be named DAGGER_CLOUD_TOKEN and can be referenced in the workflow using the format DAGGER_CLOUD_TOKEN: ${{ secrets.DAGGER_CLOUD_TOKEN }}. Refer to the GitHub documentation on using secrets in a workflow.

- For Team plan subscribers with ephemeral CI runners only: Update your GitHub Actions workflow (example below) and adjust the docker stop timeout period so that Docker waits longer before killing the Dagger Engine container, to give it more time to push data to the Dagger Cloud cache. Refer to the Docker documentation on the docker stop command.

- Install the Dagger Cloud GitHub App. Once installed, GitHub automatically adds a new check for your GitHub pull requests, with a link to see CI status for each workflow run in Dagger Cloud.

Here is a sample GitHub Actions workflow file. The Dagger Cloud integration consists of the DAGGER_CLOUD_TOKEN environment variable and the final Stop Engine step:

name: dagger
on:
  push:
    branches: [main]

jobs:
  build:
    name: build
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-node@v3
        with:
          node-version: 16
      - name: Install deps
        run: npm ci
      - name: Install Dagger CLI
        run: cd /usr/local && { curl -L https://dl.dagger.io/dagger/install.sh | sh; cd -; }
      - name: Run Dagger pipeline
        run: dagger run node index.mjs
        env:
          DAGGER_CLOUD_TOKEN: ${{ secrets.DAGGER_CLOUD_TOKEN }}
      # for ephemeral runners only: override the default docker stop timeout and
      # give the Dagger Engine more time to push cache data to Dagger Cloud
      - name: Stop Engine
        run: docker stop -t 300 $(docker ps --filter name="dagger-engine-*" -q)
        if: always()

You can use this file with the starter application and Dagger pipeline in Appendix A to test your Dagger Cloud/GitHub Actions integration.

GitLab CI

- Create a new CI/CD project variable in your GitLab project named DAGGER_CLOUD_TOKEN, and set it to the value of the token obtained in Step 1. Ensure that you configure the project variable to be masked and protected. Refer to the GitLab documentation on creating CI/CD project variables and CI/CD variable security.

- Update your GitLab CI workflow and add the variable to your CI environment. The environment variable must be named DAGGER_CLOUD_TOKEN. Refer to the GitLab documentation on using CI/CD variables.

- For Team plan subscribers with ephemeral CI runners only: Update your GitLab CI workflow (example below) and adjust the docker stop timeout period so that Docker waits longer before killing the Dagger Engine container, to give it more time to push data to the Dagger Cloud cache. Refer to the Docker documentation on the docker stop command.

Here is a sample GitLab CI workflow. The Dagger Cloud integration consists of the DAGGER_CLOUD_TOKEN variable and the final docker stop command:

.docker:
  image: node:16-alpine
  services:
    - docker:${DOCKER_VERSION}-dind
  variables:
    DOCKER_HOST: tcp://docker:2376
    DOCKER_TLS_VERIFY: '1'
    DOCKER_TLS_CERTDIR: '/certs'
    DOCKER_CERT_PATH: '/certs/client'
    DOCKER_DRIVER: overlay2
    DOCKER_VERSION: '20.10.16'
    DAGGER_CLOUD_TOKEN: $DAGGER_CLOUD_TOKEN

.dagger:
  extends: [.docker]
  before_script:
    - apk add docker-cli curl
    - cd /usr/local && { curl -L https://dl.dagger.io/dagger/install.sh | sh; cd -; }

build:
  extends: [.dagger]
  script:
    - npm ci
    - dagger run node index.mjs
    # for ephemeral runners only: override the default docker stop timeout and
    # give the Dagger Engine more time to push cache data to Dagger Cloud
    - docker stop -t 300 $(docker ps --filter name="dagger-engine-*" -q)

CircleCI

- Create a new environment variable in your CircleCI project named DAGGER_CLOUD_TOKEN and set it to the value of the token obtained in Step 1. Refer to the CircleCI documentation on creating environment variables for a project.

- For Team plan subscribers with ephemeral CI runners only: Update your CircleCI workflow (example below) and adjust the docker stop timeout period so that Docker waits longer before killing the Dagger Engine container, to give it more time to push data to the Dagger Cloud cache. Refer to the Docker documentation on the docker stop command.

- For GitHub, GitLab or Atlassian Bitbucket source code repositories only: Update your CircleCI workflow and add the following pipeline values to the CI environment. Refer to the CircleCI documentation on using pipeline values.

  GitHub:

  environment:
    CIRCLE_PIPELINE_NUMBER: << pipeline.number >>

  GitLab:

  environment:
    CIRCLE_PIPELINE_NUMBER: << pipeline.number >>
    CIRCLE_PIPELINE_TRIGGER_LOGIN: << pipeline.trigger_parameters.gitlab.user_username >>
    CIRCLE_PIPELINE_REPO_URL: << pipeline.trigger_parameters.gitlab.repo_url >>
    CIRCLE_PIPELINE_REPO_FULL_NAME: << pipeline.trigger_parameters.gitlab.repo_name >>

  Atlassian Bitbucket:

  environment:
    CIRCLE_PIPELINE_NUMBER: << pipeline.number >>

Here is a sample CircleCI workflow. The DAGGER_CLOUD_TOKEN environment variable will be automatically injected.

version: 2.1

jobs:
  build:
    docker:
      - image: cimg/node:lts # select appropriate runtime for pipeline
    environment: # for GitHub, GitLab, BitBucket only
      CIRCLE_PIPELINE_NUMBER: << pipeline.number >> # for GitHub, GitLab, BitBucket only
      CIRCLE_PIPELINE_TRIGGER_LOGIN: << pipeline.trigger_parameters.gitlab.user_username >> # for GitLab only
      CIRCLE_PIPELINE_REPO_URL: << pipeline.trigger_parameters.gitlab.repo_url >> # for GitLab only
      CIRCLE_PIPELINE_REPO_FULL_NAME: << pipeline.trigger_parameters.gitlab.repo_name >> # for GitLab only
    steps:
      - checkout
      - setup_remote_docker
      - run:
          name: Install deps
          command: npm ci
      - run:
          name: Install Dagger CLI
          command: cd /usr/local && { curl -L https://dl.dagger.io/dagger/install.sh | sh; cd -; }
      - run:
          name: Run Dagger pipeline
          command: dagger run node index.mjs
      # for ephemeral runners only: override the default docker stop timeout and
      # give the Dagger Engine more time to push cache data to Dagger Cloud
      - run:
          name: Stop Dagger Engine
          command: docker stop -t 300 $(docker ps --filter name="dagger-engine-*" -q)
          when: always

workflows:
  dagger:
    jobs:
      - build

Jenkins

- Configure a Jenkins credential named DAGGER_CLOUD_TOKEN and set it to the value of the token obtained in Step 1. Refer to the Jenkins documentation on creating credentials and credential security.

- Update your Jenkins Pipeline and add the variable to the CI environment. The environment variable must be named DAGGER_CLOUD_TOKEN and can be referenced in the Pipeline environment using the format DAGGER_CLOUD_TOKEN = credentials('DAGGER_CLOUD_TOKEN'). Refer to the Jenkins documentation on handling credentials.

Here is a sample Jenkins Pipeline. The Dagger Cloud integration consists of the environment block:

pipeline {
  agent { label 'dagger' }

  environment {
    DAGGER_CLOUD_TOKEN = credentials('DAGGER_CLOUD_TOKEN')
  }

  stages {
    stage("dagger") {
      steps {
        sh '''
          npm ci
          dagger run node index.mjs
        '''
      }
    }
  }
}

- This Jenkins Pipeline assumes that the Dagger CLI is pre-installed on the Jenkins runner(s), together with other required dependencies.

- If you use the same Jenkins server for more than one Dagger Cloud organization, create distinct credentials for each organization and link them to their respective Dagger Cloud tokens.

- Typically, Jenkins servers are non-ephemeral and therefore it is not necessary to adjust the docker stop timeout.

Argo Workflows

- Create a new Kubernetes secret named dagger-cloud and set it to the value of the token obtained in Step 1. An example command to achieve this is shown below (replace the TOKEN placeholder with your actual token value). Refer to the Kubernetes documentation on creating secrets.

  kubectl create secret generic dagger-cloud --from-literal=token=TOKEN

- Update your Argo Workflows specification and add the secret as an environment variable. The environment variable must be named DAGGER_CLOUD_TOKEN.

Here is a sample Argo Workflows specification with Dagger Cloud integration:

apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dagger-in-argo-
spec:
  entrypoint: dagger-workflow
  volumes:
    - name: dagger-conn
      hostPath:
        path: /var/run/dagger
    - name: gomod-cache
      persistentVolumeClaim:
        claimName: gomod-cache
  templates:
    - name: dagger-workflow
      inputs:
        artifacts:
          - name: project-source
            path: /work
            git:
              repo: https://github.com/kpenfound/greetings-api.git
              revision: "main"
          - name: dagger-cli
            path: /usr/local/bin
            mode: 0755
            http:
              url: https://github.com/dagger/dagger/releases/download/v0.8.7/dagger_v0.8.7_linux_arm64.tar.gz
      container:
        image: golang:1.21.0-bookworm
        command: ["sh", "-c"]
        args: ["dagger run go run ./ci ci"]
        workingDir: /work
        env:
          - name: "_EXPERIMENTAL_DAGGER_RUNNER_HOST"
            value: "unix:///var/run/dagger/buildkitd.sock"
          - name: "DAGGER_CLOUD_TOKEN"
            valueFrom:
              secretKeyRef:
                name: dagger-cloud
                key: token
        volumeMounts:
          - name: dagger-conn
            mountPath: /var/run/dagger
          - name: gomod-cache
            mountPath: /go/pkg/mod

Learn more about using Dagger with Argo Workflows.

Step 3: Visualize your pipeline runs with Dagger Cloud

At the end of this step, you will have data from one or more CI or local runs available for inspection and debugging in Dagger Cloud.

Local development

Dagger Cloud uses metadata from your version control system (VCS), such as git, to populate some information in the user interface. Therefore, you should run your Dagger pipelines within a VCS context, such as a local directory linked to a cloned git repository.

Before completing these steps, ensure you've added your Dagger Cloud token as an environment variable.

- In your terminal, run your Dagger pipeline. If your environment variable is properly defined, you will see a Dagger Cloud URL printed in the terminal.

- Click on the URL to view your pipeline run in Dagger Cloud.

Here's an example of running a Dagger Node.js pipeline named index.mjs locally after committing a change with git.

git commit -s -m "Updated quickstart link in README"

dagger run node index.mjs

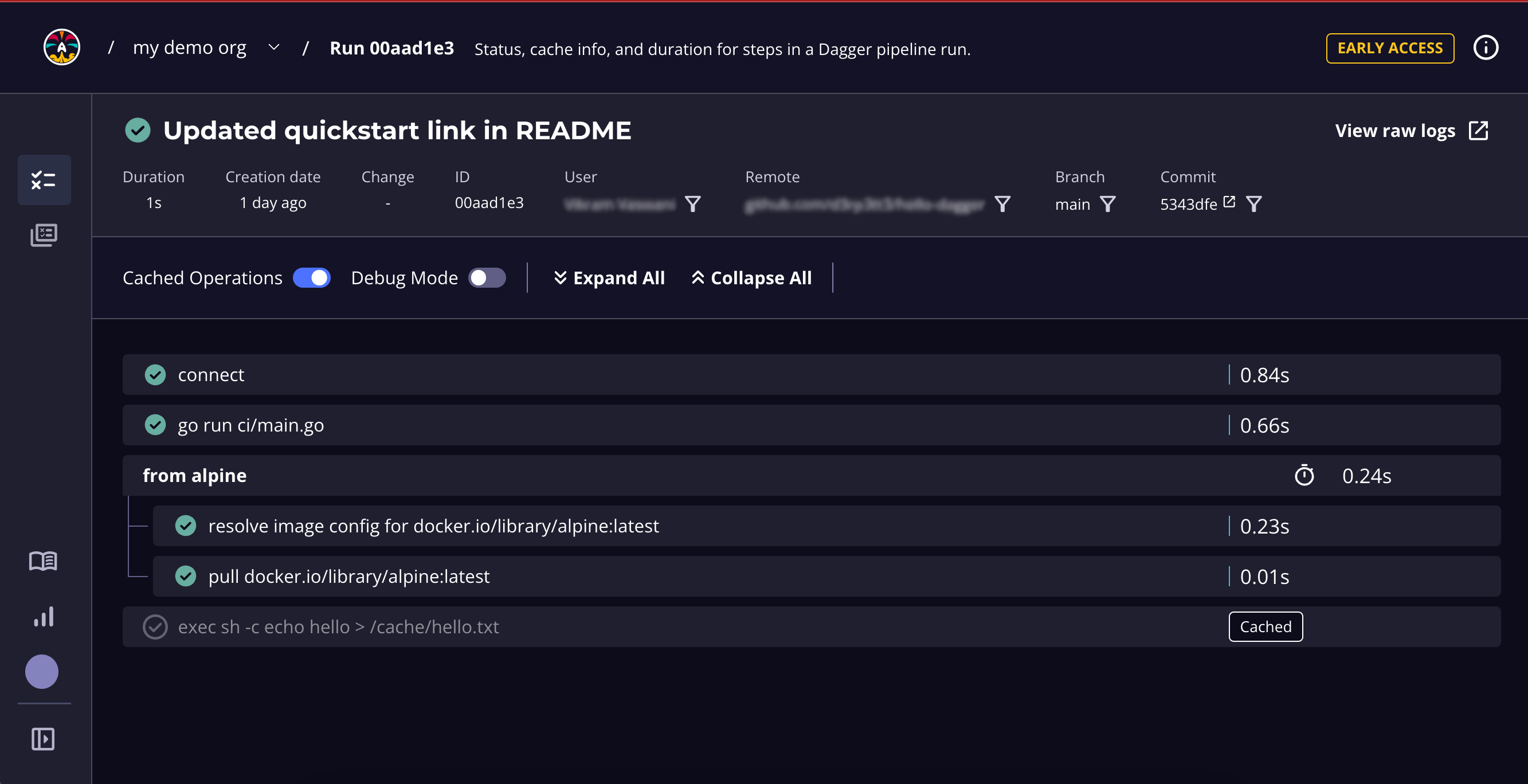

Because we ran this in a git context, the Dagger Cloud UI shows the VCS git metadata associated with the commit.

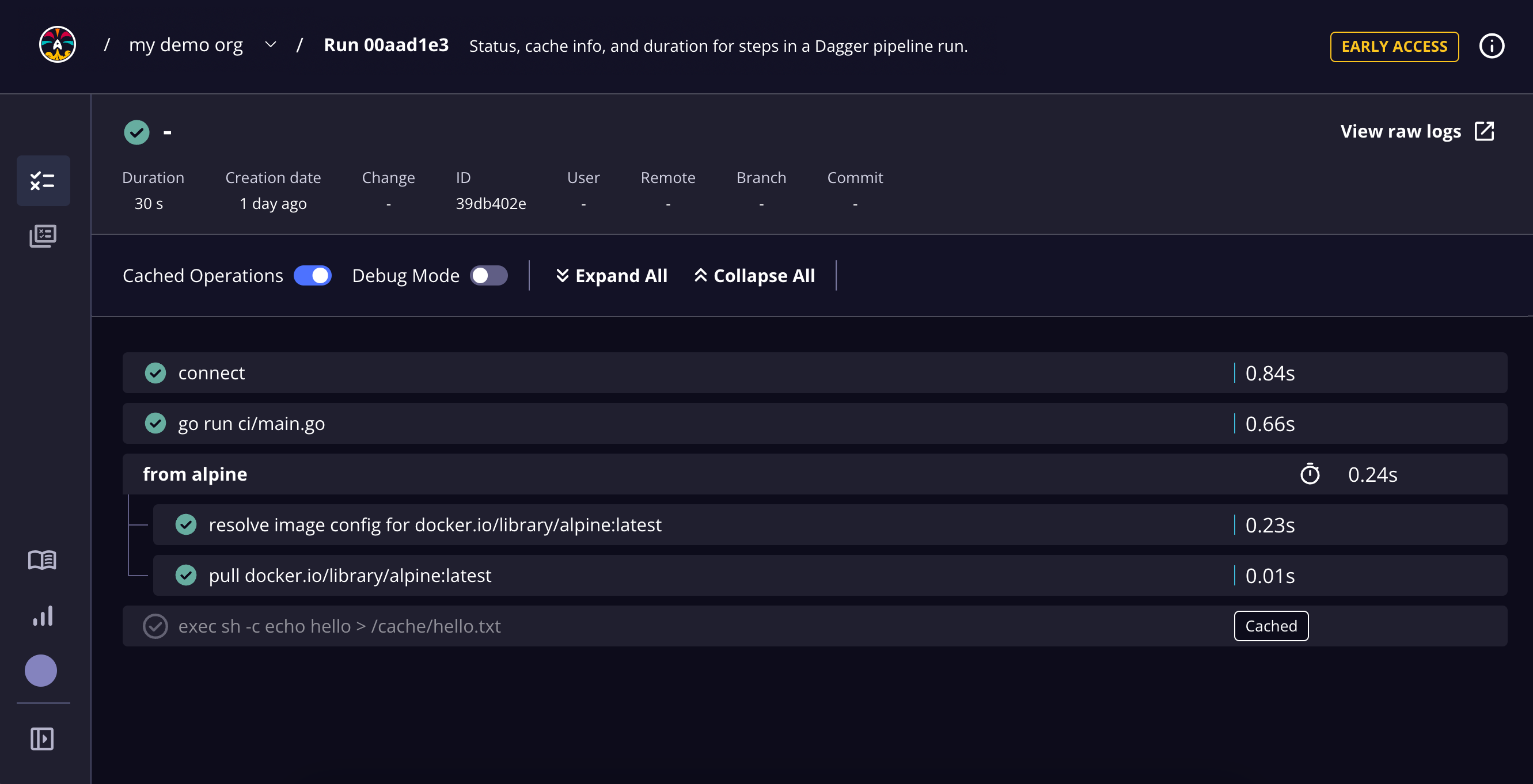

If you run outside of a git context, Dagger Cloud will show the same detailed steps. However, the top-level metadata will not be populated.

To use Dagger Cloud locally with multiple organizations, you must set distinct environment variables for each organization’s Dagger Cloud token. You can find the unique token for an organization in the Organization Settings page.
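One way to organize per-organization tokens locally is to keep one environment variable per organization and select the right one when launching a run. The sketch below is an illustration only: the DAGGER_CLOUD_TOKEN_<ORG> naming scheme, the helper names tokenVarName and envForOrg, and the sample values are all hypothetical. The only name taken from this guide is DAGGER_CLOUD_TOKEN.

```javascript
// Hypothetical naming scheme: one variable per organization, for example
// DAGGER_CLOUD_TOKEN_ACME_PLATFORM for the organization "acme-platform".
function tokenVarName(orgName) {
  return "DAGGER_CLOUD_TOKEN_" + orgName.toUpperCase().replace(/-/g, "_")
}

// Returns a copy of `env` in which DAGGER_CLOUD_TOKEN holds the token of
// the chosen organization. Throws if that organization's variable is unset.
function envForOrg(orgName, env = process.env) {
  const varName = tokenVarName(orgName)
  const token = env[varName]
  if (!token) {
    throw new Error(`${varName} is not set`)
  }
  return { ...env, DAGGER_CLOUD_TOKEN: token }
}

// Sample values for illustration; real tokens come from Organization Settings.
const demoEnv = {
  DAGGER_CLOUD_TOKEN_ACME_PLATFORM: "token-a",
  DAGGER_CLOUD_TOKEN_SIDE_PROJECT: "token-b",
}
console.log(envForOrg("side-project", demoEnv).DAGGER_CLOUD_TOKEN) // token-b
```

The returned object could then be passed as the env option when starting the pipeline as a child process, so each run reports to the intended organization without changing your shell's own environment.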

In CI

Once your CI provider/tool is connected with Dagger Cloud, it’s time to test the integration.

To do this, trigger your CI workflow and Dagger pipeline by pushing a commit or opening a pull request. If you are using the starter application and pipeline from Appendix A, use the following commands:

sed -i 's/Welcome to Dagger/Welcome to Dagger Cloud/g' src/App.tsx

git commit -a -m "Updated welcome message"

git push

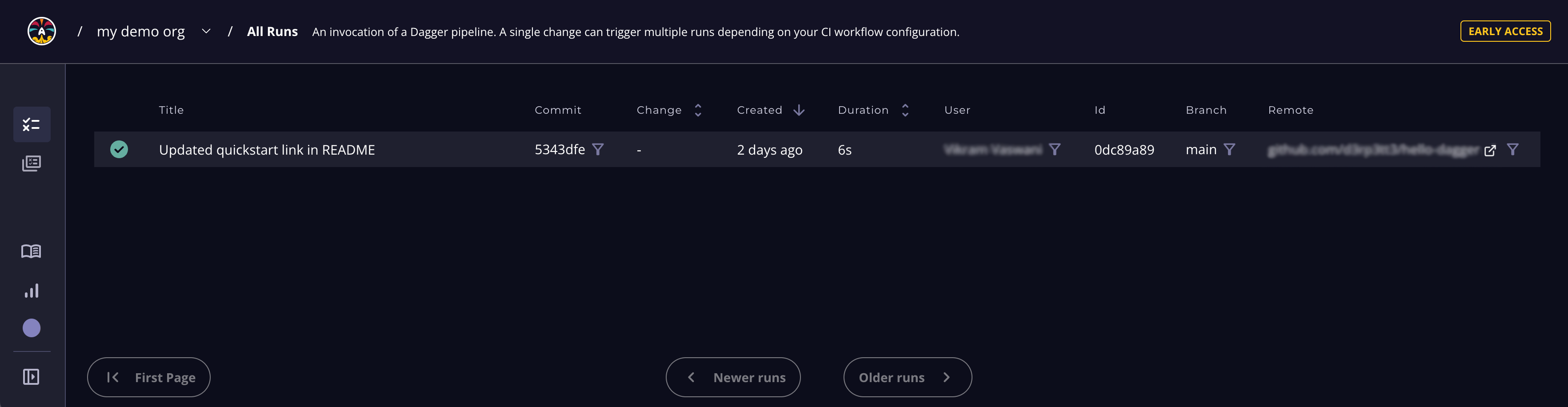

Once your CI workflow begins, navigate to the All Runs page of the Dagger Cloud dashboard. You should see your most recent CI run as the first entry in the table, as shown below:

A run represents one invocation of a Dagger pipeline. It contains detailed information about the steps performed by the pipeline.

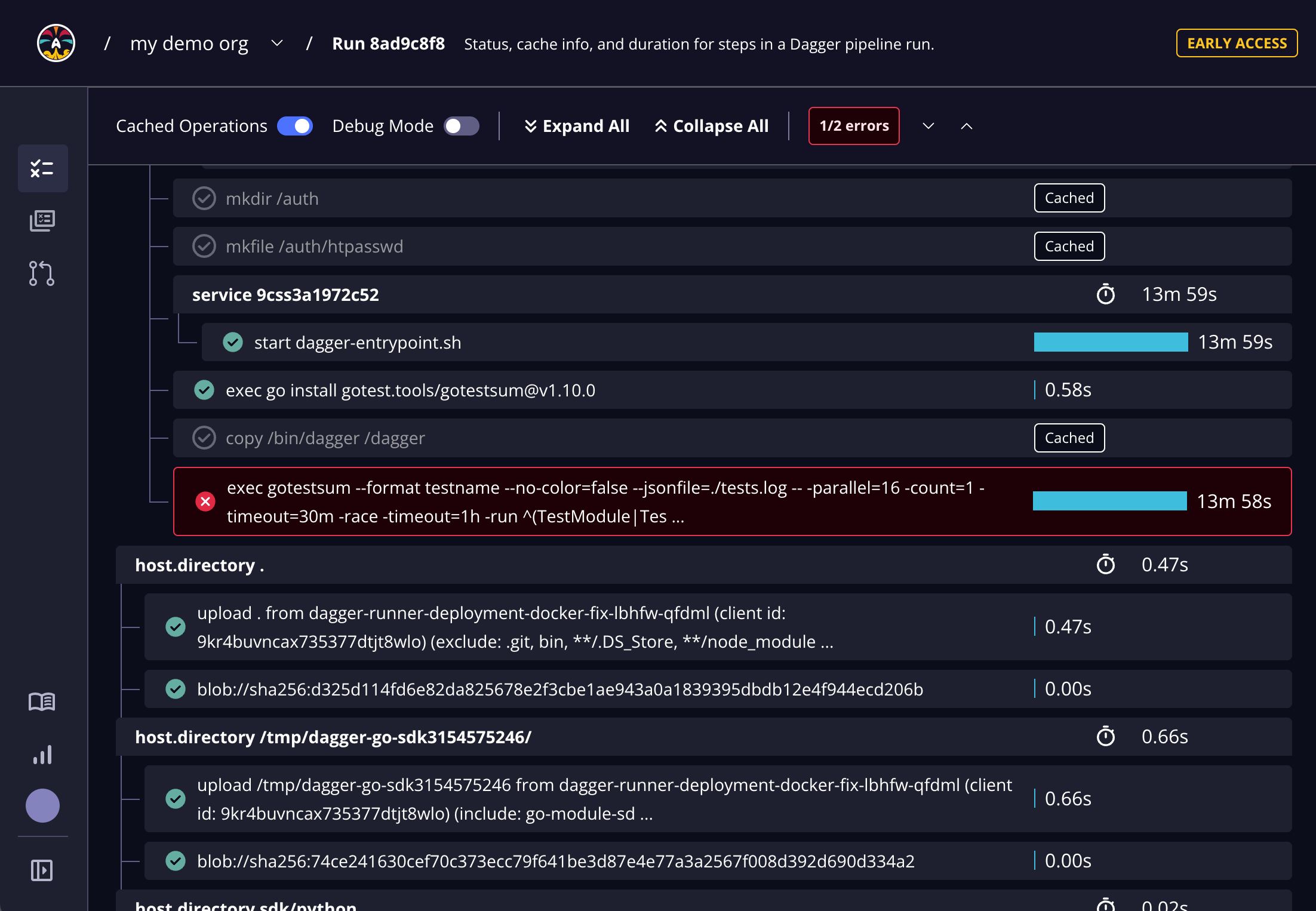

The All Runs page provides an overview of all runs. You can drill down into the details of a specific run by clicking it. This directs you to a run-specific Run Details page, as shown below:

The Run Details page includes detailed status and duration metadata about the pipeline steps. The tree view shows Dagger pipelines and steps within those pipelines. If there are any errors in the run, Dagger Cloud automatically brings you to the first error in the list. You see detailed logs and output of each step, making it easy for you to debug your pipelines and collaborate with your teammates.

Learn more about the Dagger Cloud user interface.

Step 4: Use cache volumes with the experimental Dagger Cloud cache

At the end of this step, you will have integrated Dagger Cloud's experimental distributed cache with your workflows.

Dagger Cloud's distributed caching feature is only available under the Team plan.

One of Dagger's most powerful features is its ability to cache data across pipeline runs. This is especially useful when dealing with package managers such as npm, maven, pip and similar. For these tools to cache properly, they need their own cache data (usually a directory) to be persisted between runs. Since these dependencies are usually locked to specific versions in the application's manifest, re-downloading them on every pipeline run is inefficient and time-consuming.

Dagger comes with built-in support to define one or more such directories as cache volumes and persist their contents across runs. Dagger Cloud enhances caching support significantly and allows cross-host synchronization of cache volumes. This allows multiple machines, including ephemeral runners, to intelligently share a distributed cache.

Dagger Cloud automatically detects and creates cache volumes when they are declared in your code. To see how this works, add a cache volume to your Dagger pipeline and then trigger a run. If you're using the starter application and Dagger pipeline from Appendix A, do this by updating the Dagger pipeline code as shown below (the changes are the nodeCache declaration and the withMountedCache() call):

import { connect } from "@dagger.io/dagger"

connect(
  async (client) => {
    const nodeCache = client.cacheVolume("node")

    const source = client
      .container()
      .from("node:16-slim")
      .withDirectory(
        "/src",
        client.host().directory(".", { exclude: ["node_modules/", "ci/"] }),
      )
      .withMountedCache("/src/node_modules", nodeCache)

    const runner = source.withWorkdir("/src").withExec(["npm", "install"])

    const test = runner.withExec(["npm", "test", "--", "--watchAll=false"])

    await test
      .withExec(["npm", "run", "build"])
      .directory("./build")
      .export("./build")

    const imageRef = await client
      .container()
      .from("nginx:1.23-alpine")
      .withDirectory(
        "/usr/share/nginx/html",
        client.host().directory("./build"),
      )
      .publish("ttl.sh/hello-dagger-" + Math.floor(Math.random() * 10000000))

    console.log(`Published image to: ${imageRef}`)
  },
  { LogOutput: process.stdout },
)

This revised pipeline now uses a cache volume for the application dependencies.

- It uses the client's cacheVolume() method to initialize a new cache volume named node.

- It uses the Container.withMountedCache() method to mount this cache volume at the node_modules/ mount point in the container.

Next, trigger your CI workflow by pushing a commit or opening a pull request.

If you've configured cache volumes for the first time in a local development environment, run your pipeline via the Dagger CLI and then run the command docker container stop -t 300 "$(docker container list --filter 'name=^dagger-engine-*' -q)". This step ensures your new cache volumes are pushed to Dagger Cloud, because the push happens during the engine shutdown phase. You only need to do this the first time you use Dagger Cloud locally with cache volumes or when you add new cache volumes in your Dagger pipeline.

To see your cache volumes, browse to the Organization Settings page of the Dagger Cloud dashboard (accessible by clicking your user profile icon in the Dagger Cloud interface) and navigate to the Configuration tab. You should see the newly created volume listed and enabled.

Your cache volumes will appear in the UI within a few minutes after your pipeline run has completed. If you don't see them in the UI, ensure you've referenced the volumes in your Dagger code and that you've set up your CI or local development workflows to push the cache volumes to Dagger Cloud.

You can create as many volumes as needed and manage them from the Configuration tab of your Dagger Cloud organization page.

Conclusion

This guide introduced you to Dagger Cloud and walked you through registering a new organization, integrating Dagger Cloud with your CI provider/tool, and using Dagger Cloud’s visualization and caching features. For more information and technical support, visit the Dagger Cloud reference pages or contact Dagger via the support messenger in Dagger Cloud.

Appendix A: Create a Dagger pipeline

Before you can integrate Dagger Cloud into your CI process, you need a Dagger pipeline and source code for it to interact with.

If you don't have these already, follow the steps below to create an application and its accompanying Dagger pipeline.

This section assumes that you have a Node.js development environment. It uses the starter React application and Dagger pipeline from the Dagger Quickstart in tandem with a GitHub repository. If you wish to use a different application or a different VCS, adapt the steps below accordingly.

- Begin by cloning the example application's repository:

  git clone https://github.com/dagger/hello-dagger.git

- Install the Dagger Node.js SDK:

  cd hello-dagger
  npm install @dagger.io/dagger --save-dev

- In the application directory, create a file named index.mjs and add the following code to it.

  import { connect } from "@dagger.io/dagger"

  connect(
    async (client) => {
      const source = client
        .container()
        .from("node:16-slim")
        .withDirectory(
          "/src",
          client.host().directory(".", { exclude: ["node_modules/", "ci/"] }),
        )

      const runner = source.withWorkdir("/src").withExec(["npm", "install"])

      const test = runner.withExec(["npm", "test", "--", "--watchAll=false"])

      await test
        .withExec(["npm", "run", "build"])
        .directory("./build")
        .export("./build")

      const imageRef = await client
        .container()
        .from("nginx:1.23-alpine")
        .withDirectory(
          "/usr/share/nginx/html",
          client.host().directory("./build"),
        )
        .publish("ttl.sh/hello-dagger-" + Math.floor(Math.random() * 10000000))

      console.log(`Published image to: ${imageRef}`)
    },
    { LogOutput: process.stdout },
  )

  This Dagger pipeline uses the Dagger Node.js SDK to test, build and publish a containerized version of the application to a public registry.

  Explaining the details of how this pipeline works is outside the scope of this guide; however, you can find a detailed explanation and equivalent pipeline code for Go and Python in the Dagger Quickstart.

- Commit the changes:

  git add .
  git commit -a -m "Added Dagger pipeline"

- Create a private repository in your GitHub account and push the changes to it:

  git remote remove origin
  gh auth login
  gh repo create hello-dagger --push --source . --private