Dagger filesystems: #FS

Along with container images, filesystems are one of the building blocks of the Dagger platform. They are represented by the dagger.#FS type. An #FS is a reference to a filesystem tree: a directory storing files in a hierarchical/tree structure.

Filesystems are everywhere

Filesystems are key to any CI pipeline, and Dagger is no exception. At their core, CI pipelines are just a series of transformations applied to filesystems, all the way to deployment. You may, for example:

- load code

- compile binaries

- run unit/integration tests

- deploy code/artifacts

- do anything that can possibly be done with a container

Each of these use cases requires a change or transfer of data, in the form of files in directories, from one action/step to another. The dagger.#FS makes that transfer possible.

docker.#Image vs dagger.#FS

You need an understanding of how Dagger filesystems relate to core Dagger actions and container images to fully benefit from the power of Dagger.

Dagger's core API

At its core, Dagger relies on a low-level API to interact with filesystem trees (see the core API reference). Every Universe package is an abstraction built on top of these low-level core primitives.

Let's dissect one of them, core.#Mkdir:

// Create one or multiple directories in a container
#Mkdir: {
	$dagger: task: _name: "Mkdir"

	// Container filesystem
	input: dagger.#FS

	// Path of the directory to create
	// It can be nested (e.g. "/foo" or "/foo/bar")
	path: string

	// Permissions of the directory
	permissions: *0o755 | int

	// If true, parent directories are created if they do not exist
	parents: *true | false

	// Modified filesystem
	output: dagger.#FS @dagger(generated)
}

core.#Mkdir is the Dagger equivalent of the mkdir command: it takes a dagger.#FS as input and returns, as its output, a new dagger.#FS containing the newly created directories.
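Because every core action consumes and produces a dagger.#FS, core actions can be chained by feeding one action's `output` into the next action's `input`. The following is a minimal sketch that assumes the Dagger 0.2 CUE API, where `core.#WriteFile` (with `input`, `path`, `contents`, and `output` fields) lives in `dagger.io/dagger/core` alongside `core.#Mkdir`. The action names `mkdir` and `write` are hypothetical.

```cue
package main

import (
	"dagger.io/dagger"
	"dagger.io/dagger/core"
)

// Sketch: hypothetical action names, assuming the Dagger 0.2 CUE API
dagger.#Plan & {
	actions: {
		// Start from an empty filesystem and create /foo/bar
		// (parents defaults to true, so /foo is created as well)
		mkdir: core.#Mkdir & {
			input: dagger.#Scratch
			path:  "/foo/bar"
		}

		// Feed the modified filesystem into the next core action
		write: core.#WriteFile & {
			input:    mkdir.output
			path:     "/foo/bar/hello.txt"
			contents: "hello"
		}
	}
}
```

Here `write.output` is a dagger.#FS that contains `/foo/bar/hello.txt`. Each action returns a new filesystem instead of modifying its input in place.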

As Dagger is statically typed, you can look at an action's definition to see which types it requires and outputs. In most cases, an action takes as input either a dagger.#FS or a docker.#Image. Let's look at the docker.#Image definition to find the #FS it contains.

Dissecting docker.#Image

Inspecting the docker.#Image package is a good way to grasp the relation between filesystems and images:

// A container image
#Image: {
	// Root filesystem of the image.
	rootfs: dagger.#FS

	// Image config
	config: core.#ImageConfig
}

Many Universe packages don't accept filesystems (dagger.#FS) directly as input, but rely on images (docker.#Image) instead, to let users specify the environment in which an action's logic will be executed.

As you can see, docker.#Image and dagger.#FS are closely tied, since an image is just a filesystem (rootfs) together with some configuration (config).

Since every docker.#Image contains a rootfs field, it is possible to access the dagger.#FS of any docker.#Image. This is very useful when copying filesystems between container images, passing an #FS to an action that requires one, or exporting the final filesystem/files/artifacts after a build to use with other actions (or to save on the client filesystem).
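For instance, a core action that expects a dagger.#FS can operate directly on an image's root filesystem. The following minimal sketch assumes the Dagger 0.2 CUE API and assumes that `alpine.#Build` exposes the resulting docker.#Image as `output`, as it does in the later examples of this guide. The `artifacts` action name is hypothetical.

```cue
package main

import (
	"dagger.io/dagger"
	"dagger.io/dagger/core"
	"universe.dagger.io/alpine"
)

// Sketch: hypothetical action names, assuming the Dagger 0.2 CUE API
dagger.#Plan & {
	actions: {
		// Build an Alpine image (a docker.#Image)
		_img: alpine.#Build

		// Extract its rootfs (a dagger.#FS) and add a directory to it
		artifacts: core.#Mkdir & {
			input: _img.output.rootfs
			path:  "/artifacts"
		}
	}
}
```

Note that `artifacts.output` is a plain dagger.#FS, not an image. The `config` of `_img` is not carried along.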

The converse is also true: from a given filesystem, it is possible to build a docker.#Image (with the help of the dagger.#Scratch type and some core actions).

Sometimes you need an empty filesystem, for example to start a minimal image build. The docker package implements a docker.#Scratch image on top of the dagger.#Scratch type, a core type representing an empty rootfs.

#Scratch: #Image & {
	rootfs: dagger.#Scratch
	config: {}
}
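Putting both directions together, you can build an image from a filesystem by copying that filesystem onto docker.#Scratch. The following minimal sketch assumes the Dagger 0.2 CUE API and assumes that docker.#Copy accepts an `input` image and a `contents` filesystem, as its use later in this guide shows. The `_files` and `minimal` names are hypothetical.

```cue
package main

import (
	"dagger.io/dagger"
	"dagger.io/dagger/core"
	"universe.dagger.io/docker"
)

// Sketch: hypothetical action names, assuming the Dagger 0.2 CUE API
dagger.#Plan & {
	actions: {
		// A filesystem containing a single file, built from scratch
		_files: core.#WriteFile & {
			input:    dagger.#Scratch
			path:     "/hello.txt"
			contents: "hello"
		}

		// Copy that filesystem onto an empty image:
		// minimal.output is a docker.#Image whose rootfs holds /hello.txt
		minimal: docker.#Copy & {
			input:    docker.#Scratch
			contents: _files.output
			dest:     "/"
		}
	}
}
```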

Learn more about the docker package

Moving filesystems between actions

Let's explore in depth how to transfer filesystems between actions.

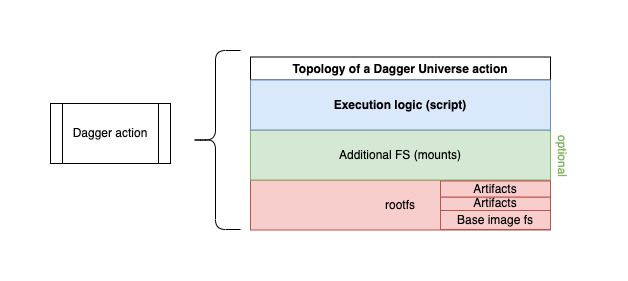

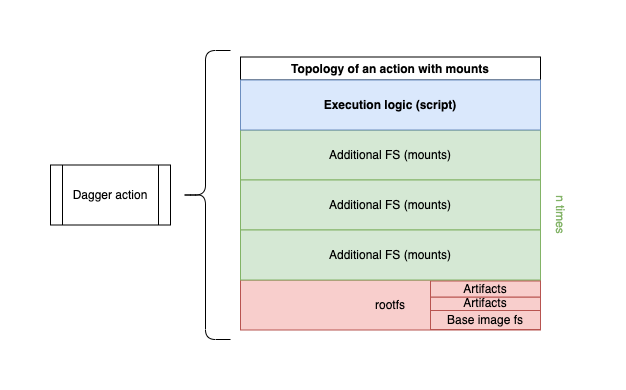

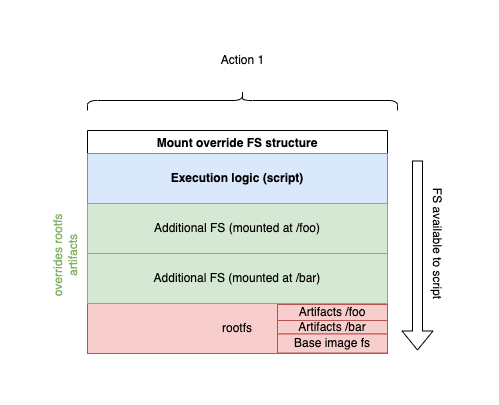

Topology of a Universe action (from an #FS perspective)

As stated above, most actions require a docker.#Image as input. Since an image contains a rootfs, the first #FS of an action is its rootfs. Some actions also create artifacts: these computed outputs are a second type of filesystem, living inside the rootfs.

Lastly, some filesystems need to be shared between actions, but only need to exist while an action executes.

This is the last type of filesystem that you might encounter. For the sake of this guide, let's call them additional filesystems.

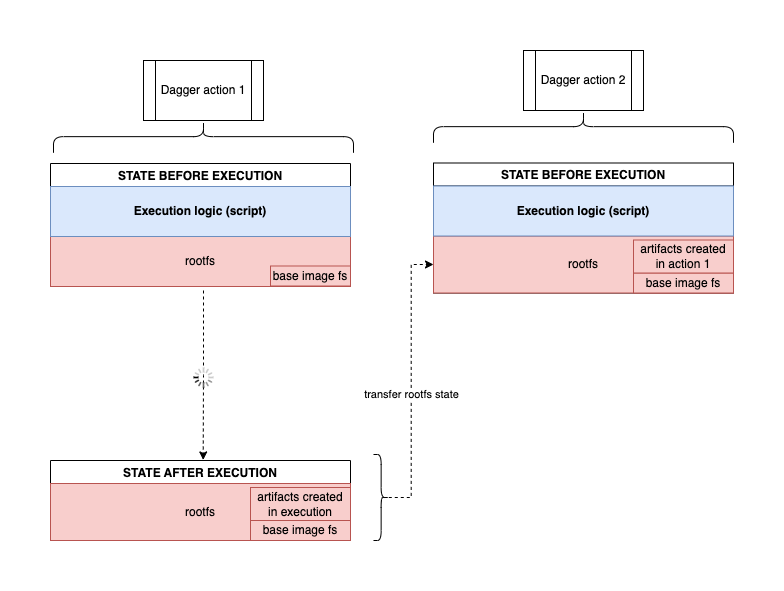

Transfer of filesystems via docker.#Image

Since most Dagger actions run within a container image, an easy way to transfer a filesystem is to make the output image of one action the input of the next one. In other words, the state of the first action's rootfs after execution becomes the initial rootfs of the second action.

A common use-case is to create an action whose sole purpose is to install all the required packages/dependencies, and let the next action execute the core logic:

package main

import (
	"dagger.io/dagger"
	"universe.dagger.io/bash"
)

dagger.#Plan & {
	actions: {
		// Create hidden action (not present in `dagger do`)
		// This action runs a bash command on a container image
		// The bash command creates a file
		_first: bash.#RunSimple & {
			// Run bash command to create a file
			script: contents: """
				echo example > /tmp/test
				"""
		}

		// Use the output image of the `_first` action as the input image
		test: bash.#Run & {
			// Use as image the state of the `_first` image, after execution
			input: _first.output

			// Context: Always run this action, bypassing the cache
			always: true

			// Show content of file, to see if the `#FS` has indeed been shared between the two actions
			script: contents: "cat /tmp/test"
		}
	}
}

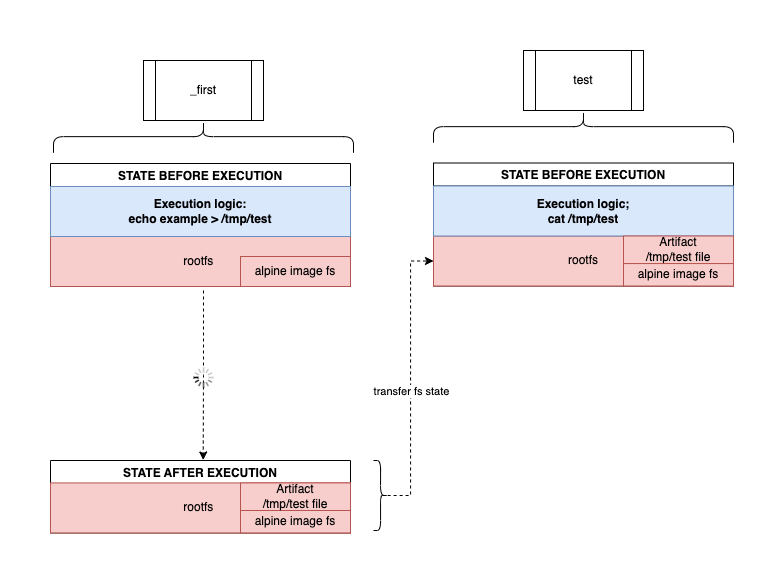

A simplified visual representation of the above plan would be:

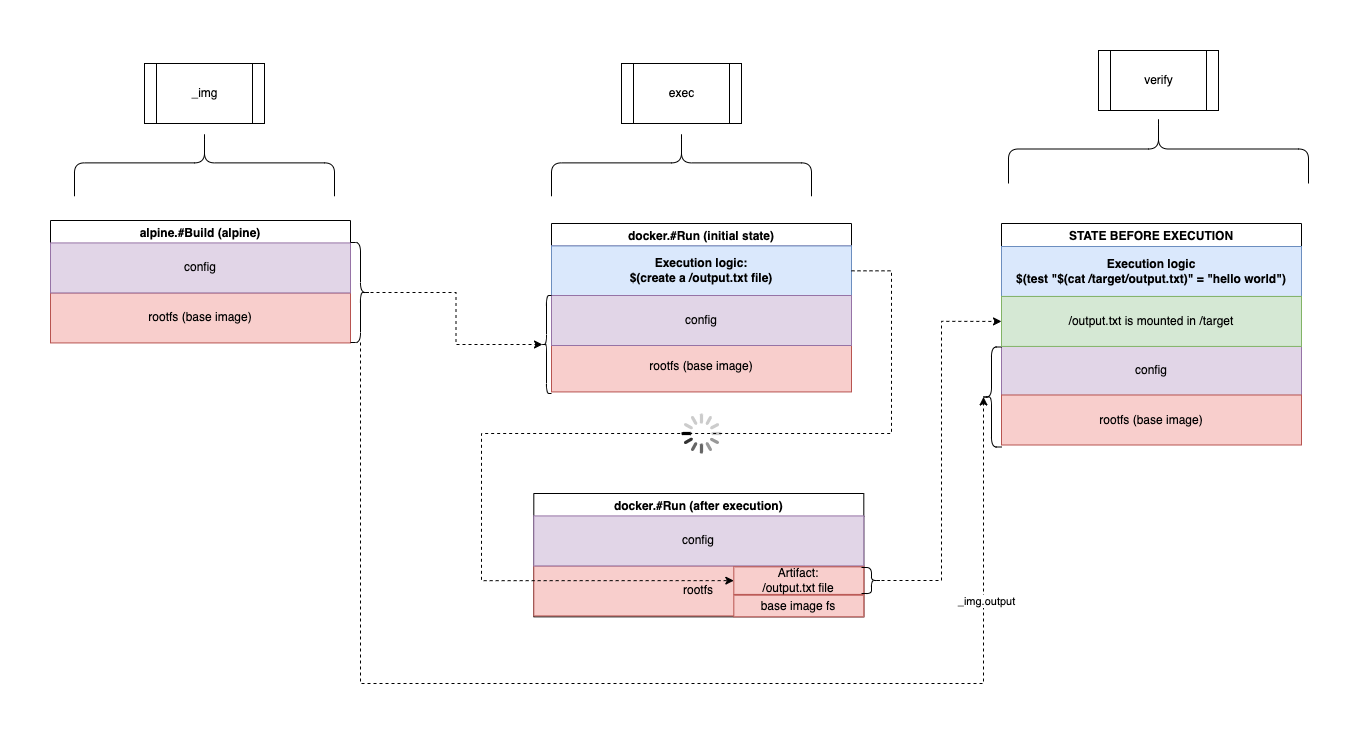

Transfer of #FS via mounts

A mount is a way to add new filesystem layers to a container image. With mounts, the container running an action sees not only its rootfs, but also one additional filesystem layer per mounted filesystem.

Difference between a Docker bind mount and Dagger mounts

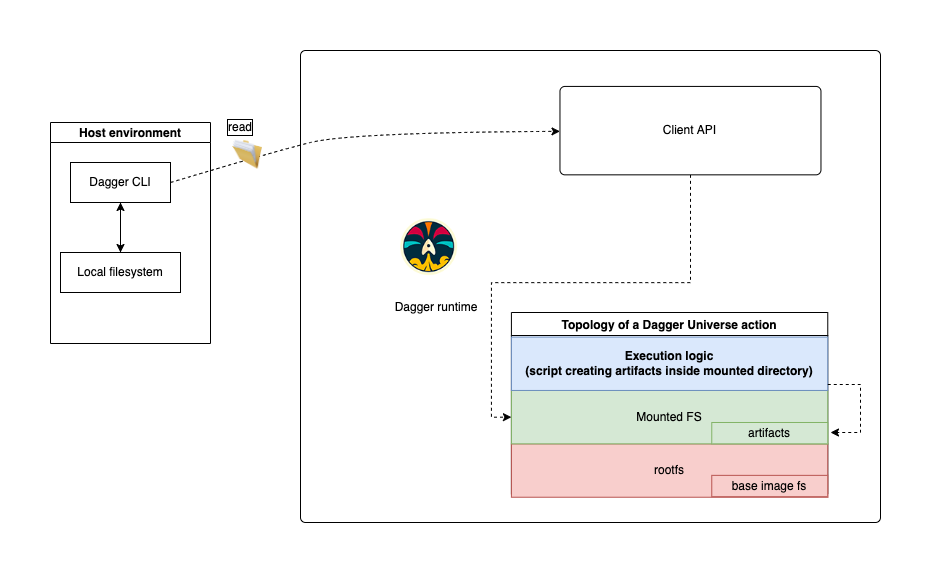

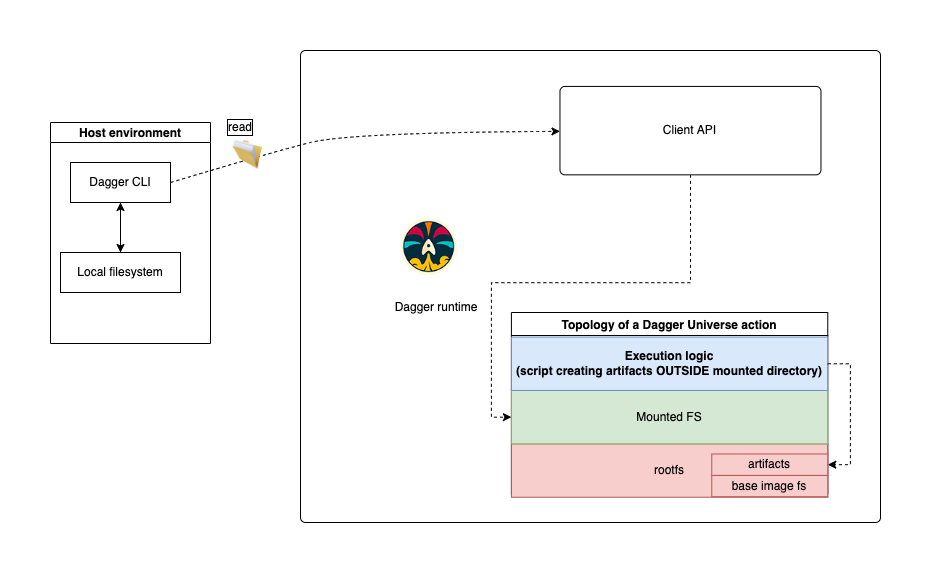

Dagger mounts are very similar to the Docker ones you may be familiar with. You can mount filesystems from your underlying dev/CI machine (host) or from another action. The main difference is that Dagger mounts are transient (more on that below) and, unlike Docker "bind" mounts, not bidirectional. So even if you mount a #FS that was read from the host system (client API), any script writing to the mounted folder inside the container only writes to the container's filesystem layer, and the writes do not affect the underlying client system at all.

Whether you mount a directory from your dev/CI host (using the client API) or from another action, changes to the mount only exist in the context of that action's execution.

However, if your script creates artifacts outside of the mounted filesystem, they are written to the rootfs layer. That's a great way to make generated artifacts, files, and directories shareable between actions.

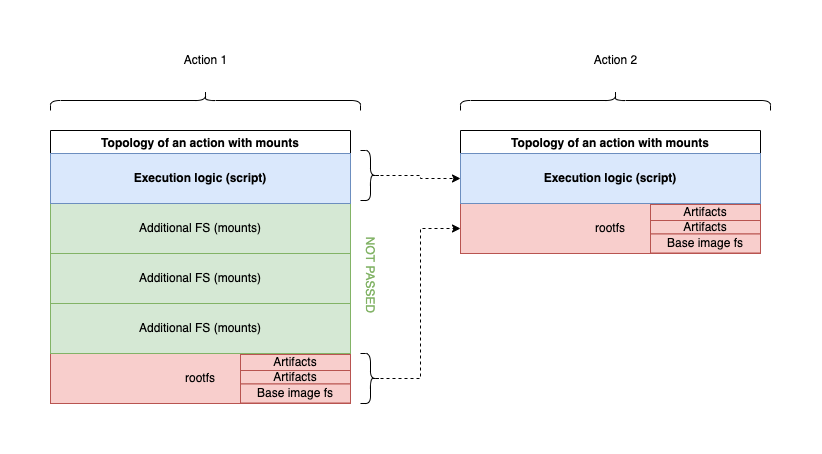
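The following minimal sketch shows this behavior. It assumes the Dagger 0.2 CUE API and the `bash` package used elsewhere in this guide. The `_src`, `build`, and `check` names and the `/src` and `/out` paths are hypothetical. The `build` action reads from a mounted filesystem and writes its result outside the mount, so the result becomes part of `build.output` and is visible to `check`.

```cue
package main

import (
	"dagger.io/dagger"
	"dagger.io/dagger/core"
	"universe.dagger.io/bash"
)

// Sketch: hypothetical action names and paths, assuming the Dagger 0.2 CUE API
dagger.#Plan & {
	actions: {
		// Source filesystem that will be mounted, not copied
		_src: core.#WriteFile & {
			input:    dagger.#Scratch
			path:     "/main.txt"
			contents: "source"
		}

		build: bash.#RunSimple & {
			mounts: "input files": {
				dest:     "/src"
				contents: _src.output
			}
			// /out lives in the rootfs layer, so it survives this action;
			// anything written under /src is discarded with the mount
			script: contents: """
				mkdir -p /out
				cp /src/main.txt /out/main.txt
				"""
		}

		check: bash.#Run & {
			// build.output carries the rootfs (including /out), not the mount
			input:  build.output
			always: true
			script: contents: "cat /out/main.txt"
		}
	}
}
```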

Mounts are not shared between actions (transient)

In Dagger, an image is only composed of a rootfs and a config, so when the image is passed to the next action, all mounted filesystems are lost.

Mounted FS cannot be exported (transient)

Dagger exports only read from the rootfs. Because mounts do not reside inside the rootfs layer but on a layer above it, any data that exists only in a mounted filesystem is lost once the action finishes, unless you mount it again in the next action.
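If a later action needs the same data, declare the mount again in that action. The following minimal sketch assumes the Dagger 0.2 CUE API and uses the hypothetical actions `_data`, `first`, and `second`. The `second` action starts from `first.output`, which does not include the `/data` mount of `first`, so `second` declares the mount a second time.

```cue
package main

import (
	"dagger.io/dagger"
	"dagger.io/dagger/core"
	"universe.dagger.io/bash"
)

// Sketch: hypothetical action names and paths, assuming the Dagger 0.2 CUE API
dagger.#Plan & {
	actions: {
		_data: core.#WriteFile & {
			input:    dagger.#Scratch
			path:     "/hello.txt"
			contents: "hello"
		}

		first: bash.#RunSimple & {
			mounts: "data": {
				dest:     "/data"
				contents: _data.output
			}
			script: contents: "cat /data/hello.txt"
		}

		second: bash.#Run & {
			// Reuse the image of `first`: its rootfs and config, but not its mounts
			input: first.output
			// Declare the mount again to access /data in this action
			mounts: "data": {
				dest:     "/data"
				contents: _data.output
			}
			always: true
			script: contents: "cat /data/hello.txt"
		}
	}
}
```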

Mounts can overshadow filesystems

When a filesystem is mounted on top of a preexisting directory, the directory's original contents are temporarily hidden (shadowed) while the action runs.

In the example above, the script only has access to the mounted /foo and /bar directories: the artifacts at the same paths in the rootfs layer are shadowed in the superposition of all layers.
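The following minimal sketch reproduces the shadowing effect. It assumes the Dagger 0.2 CUE API, and the action names and paths are hypothetical. The `_prep` action creates `/app/rootfs.txt` in the rootfs. The `shadow` action then mounts another filesystem at `/app`, and while the mount is active, only the mounted file is visible at that path.

```cue
package main

import (
	"dagger.io/dagger"
	"dagger.io/dagger/core"
	"universe.dagger.io/bash"
)

// Sketch: hypothetical action names and paths, assuming the Dagger 0.2 CUE API
dagger.#Plan & {
	actions: {
		// Write a file into the rootfs at /app
		_prep: bash.#RunSimple & {
			script: contents: """
				mkdir -p /app
				echo rootfs > /app/rootfs.txt
				"""
		}

		// A separate filesystem with a single file
		_mounted: core.#WriteFile & {
			input:    dagger.#Scratch
			path:     "/mounted.txt"
			contents: "mount"
		}

		shadow: bash.#Run & {
			input: _prep.output
			mounts: "over app": {
				dest:     "/app"
				contents: _mounted.output
			}
			always: true
			// Succeeds only if the mounted file is visible and the rootfs file is hidden
			script: contents: "test -f /app/mounted.txt && test ! -f /app/rootfs.txt"
		}
	}
}
```

The shadowing only lasts while the mount is active. The rootfs layer underneath is left untouched, so `/app/rootfs.txt` is still present in the rootfs.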

Example

Below is a plan showing how to mount an FS prior to executing a command on top of it:

package main

import (
	"dagger.io/dagger"
	"universe.dagger.io/alpine"
	"universe.dagger.io/docker"
)

dagger.#Plan & {
	actions: {
		// Create base image for the two actions relying on
		// the docker.#Run universe package (exec and verify)
		_img: alpine.#Build

		// Create a file inside alpine's image
		exec: docker.#Run & {
			// Retrieve the image from the `_img` (alpine.#Build) action
			// Make it the base image for this action
			input: _img.output

			// Execute a command in this container
			command: {
				name: "sh"
				// Create an output.txt file
				flags: "-c": #"""
					echo -n hello world >> /output.txt
					"""#
			}
		}

		// Verify the content of the file created by `exec`
		verify: docker.#Run & {
			// Retrieve the image from the `_img` (alpine.#Build) action
			// Make it the base image for this action
			input: _img.output

			// Mount the rootfs of the `exec` action after execution
			// (after the /output.txt file has been created)
			// Mount it at /target, in the container
			mounts: "example target": {
				dest:     "/target"
				contents: exec.output.rootfs
			}

			command: {
				name: "sh"
				// Verify that /target/output.txt exists and contains "hello world"
				flags: "-c": """
					test "$(cat /target/output.txt)" = "hello world"
					"""
			}
		}
	}
}

Visually, these are the underlying steps of the above plan:

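Because the mount only exists while the verify action runs, chaining outputs will not work: verify.output does not contain /target/output.txt, so a later action that takes verify.output as its input cannot read that file.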

Mounts are very useful to retrieve filesystems from several previous actions and use them together (to execute or produce something), as you can mount as many filesystems as you need.

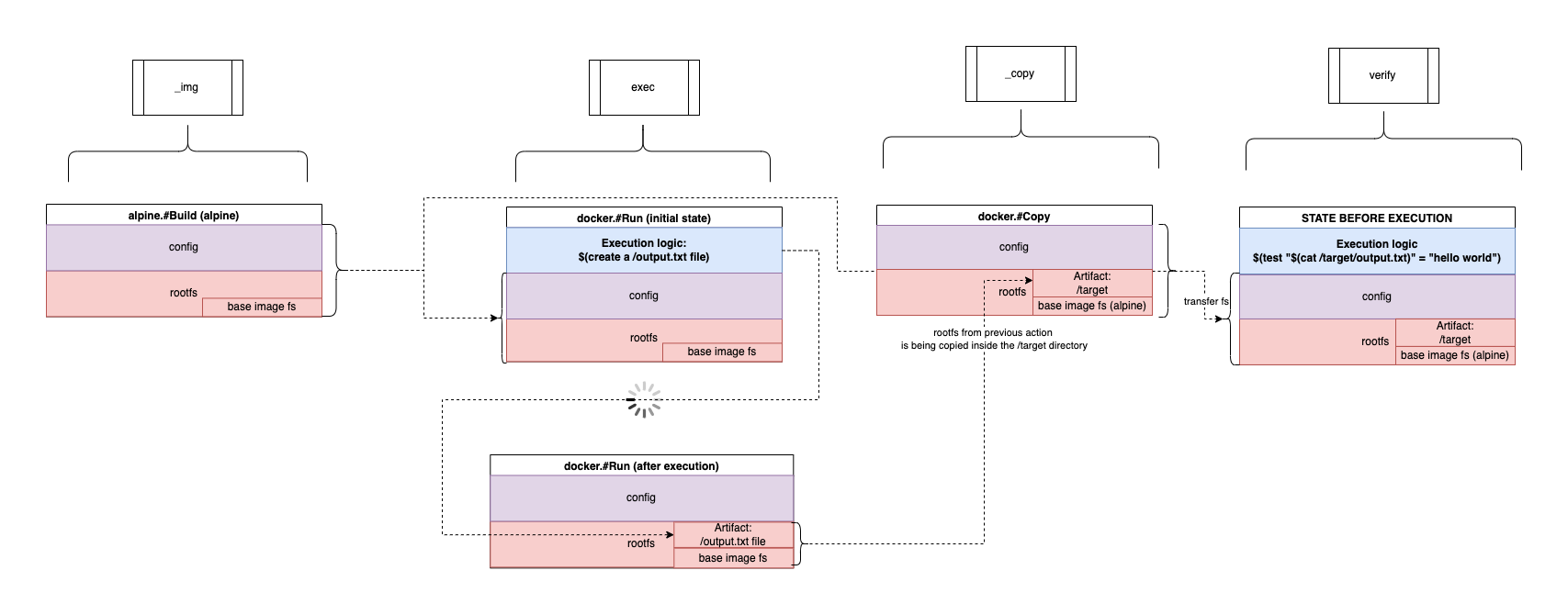
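The following sketch mounts two independent filesystems into one container and writes a file derived from both. It assumes the Dagger 0.2 CUE API and the hypothetical actions `_a`, `_b`, and `combine`. It also assumes that docker.#Run, and therefore bash.#RunSimple, supports `export: files`, the string counterpart of the `export: directories` field used later in this guide.

```cue
package main

import (
	"dagger.io/dagger"
	"dagger.io/dagger/core"
	"universe.dagger.io/bash"
)

// Sketch: hypothetical action names and paths, assuming the Dagger 0.2 CUE API
dagger.#Plan & {
	actions: {
		_a: core.#WriteFile & {
			input:    dagger.#Scratch
			path:     "/a.txt"
			contents: "a"
		}
		_b: core.#WriteFile & {
			input:    dagger.#Scratch
			path:     "/b.txt"
			contents: "b"
		}

		combine: bash.#RunSimple & {
			// Each mount needs a unique key and its own destination
			mounts: {
				"from a": {dest: "/in/a", contents: _a.output}
				"from b": {dest: "/in/b", contents: _b.output}
			}
			// The result is written outside the mounts, in the rootfs
			script: contents: "cat /in/a/a.txt /in/b/b.txt > /combined.txt"
			// Expose the file content as a string to other actions
			export: files: "/combined.txt": string
		}
	}
}
```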

Transfer of #FS via docker.#Copy

docker.#Copy copies the contents of an #FS into the rootfs of an image. It relies on the core.#Copy action to perform this operation:

// Copy files from one FS tree to another
#Copy: {
	$dagger: task: _name: "Copy"

	// Input of the operation
	input: dagger.#FS

	// Contents to copy
	contents: dagger.#FS

	// Source path (optional)
	source: string | *"/"

	// Destination path (optional)
	dest: string | *"/"

	// Optionally include certain files
	include: [...string]

	// Optionally exclude certain files
	exclude: [...string]

	// Output of the operation
	output: dagger.#FS @dagger(generated)
}
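core.#Copy can also be used on its own, on plain filesystems, without any image. The following minimal sketch assumes the Dagger 0.2 CUE API, and the action names are hypothetical. It copies only the contents of the `/config` directory of one filesystem into `/etc/app` of another, using the `source` and `dest` fields of the definition.

```cue
package main

import (
	"dagger.io/dagger"
	"dagger.io/dagger/core"
)

// Sketch: hypothetical action names and paths, assuming the Dagger 0.2 CUE API
dagger.#Plan & {
	actions: {
		// Build a filesystem holding /config/app.conf
		_dir: core.#Mkdir & {
			input: dagger.#Scratch
			path:  "/config"
		}
		_files: core.#WriteFile & {
			input:    _dir.output
			path:     "/config/app.conf"
			contents: "key=value"
		}

		// Copy the contents of /config into /etc/app on an empty filesystem
		configCopy: core.#Copy & {
			input:    dagger.#Scratch
			contents: _files.output
			source:   "/config"
			dest:     "/etc/app"
		}
	}
}
```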

Let's take the same plan as the one used earlier to show how to mount a dagger.#FS. This time, we rely on docker.#Copy instead of a mount, so the #FS becomes part of the rootfs and can be shared with other actions:

package main

import (
	"dagger.io/dagger"
	"universe.dagger.io/alpine"
	"universe.dagger.io/docker"
)

dagger.#Plan & {
	actions: {
		// Create base image for the two actions relying on
		// the docker.#Run universe package (exec and verify)
		_img: alpine.#Build

		// Create a file inside alpine's image
		exec: docker.#Run & {
			// Retrieve the image from the `_img` (alpine.#Build) action
			// Make it the base image for this action
			input: _img.output

			// Execute a command in this container
			command: {
				name: "sh"
				// Create an output.txt file
				flags: "-c": #"""
					echo -n hello world >> /output.txt
					"""#
			}
		}

		// Copy the rootfs of the `exec` action after execution
		// (after the /output.txt file has been created)
		// Copy it to /target, in the container's rootfs
		_copy: docker.#Copy & {
			input:    _img.output
			contents: exec.output.rootfs
			dest:     "/target"
		}

		// Verify the content of the file copied from `exec`
		verify: docker.#Run & {
			// Retrieve the image from the `_copy` action
			// Make it the base image for this action
			input: _copy.output

			command: {
				name: "sh"
				// Verify that /target/output.txt exists and contains "hello world"
				flags: "-c": """
					test "$(cat /target/output.txt)" = "hello world"
					"""
			}
		}
	}
}

Visually, these are the underlying steps of the above plan:

The verify action does not have any mount, and instead has access to the /target artifact from the exec action due to the docker.#Copy (_copy action).

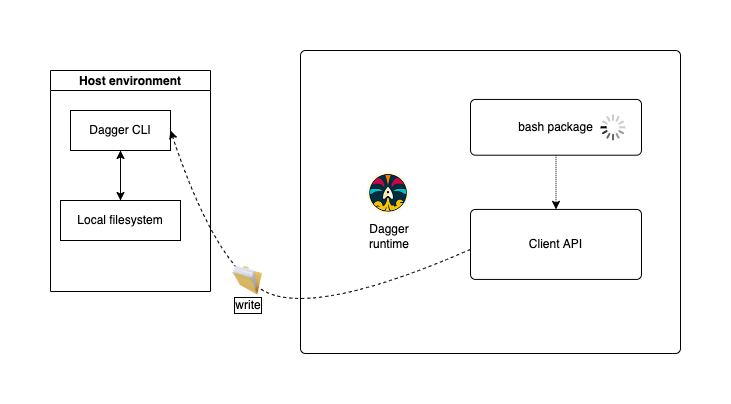

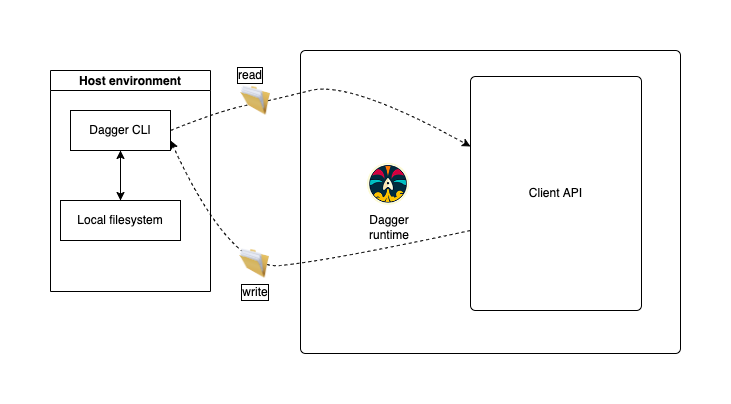

Mounting host #FS to container (#FS perspective)

Filesystems are not only shared between actions; they can also be shared between the host (e.g. a dev/CI machine) and the Dagger runtime.

Example plan to read an #FS from the host

Below is a plan showing how to list the contents of the directory from which the dagger CLI is run (the current working directory).

This example uses the client API, but if you only need access to files within your Dagger project, core.#Source may be a better choice.

package main

import (
	"dagger.io/dagger"
	"universe.dagger.io/bash"
)

dagger.#Plan & {
	// Path may be absolute, or relative to the current working directory
	// (i.e. the directory the dagger CLI is run from)
	client: filesystem: ".": read: {
		// Load the '.' directory (host filesystem) into Dagger's runtime
		// Specify to the Dagger runtime that it is a `dagger.#FS`
		contents: dagger.#FS
	}

	actions: {
		// Use the bash package to list the content of the local filesystem
		list: bash.#RunSimple & {
			// Script to execute
			script: contents: """
				ls -l /tmp/example
				"""

			// Context: Always run this action, bypassing the cache
			always: true

			// Mount the client FS into the container used by the `bash` package
			mounts: "Local FS": {
				// The path key must match the client filesystem read above ('.')
				contents: client.filesystem.".".read.contents
				// Where to mount the FS, in your container image
				dest: "/tmp/example"
			}
		}
	}
}

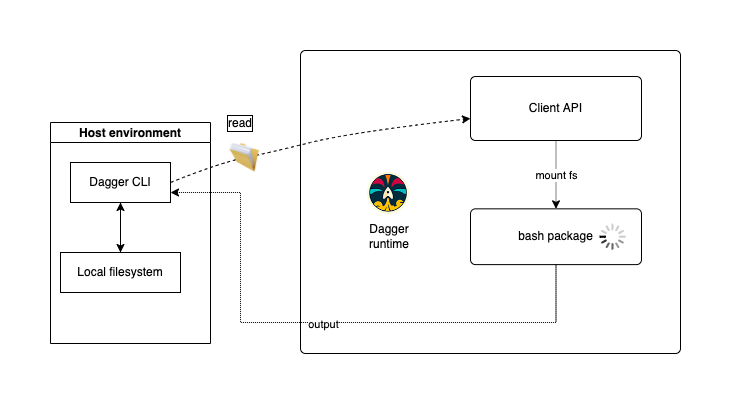

A simplified visual representation of the above plan would be:

Example plan to write an #FS to the host

Let's now write to the host filesystem:

package main

import (
	"dagger.io/dagger"
	"universe.dagger.io/bash"
)

dagger.#Plan & {
	// Context: Client API
	client: {
		// Context: Interact with filesystem on host
		filesystem: {
			// Context: the key is the host path to write to,
			// relative to the current working directory
			"./tmp-example": {
				// 3. Write to the host filesystem with the content of the `export: directories: "/tmp"` key
				// We access the `/tmp` fs by referencing its key
				write: contents: actions.create.export.directories."/tmp"
			}
		}
	}

	actions: {
		// Context: Action named `create` that creates a file inside a container and exports the `/tmp` dir
		create: bash.#RunSimple & {
			// 1. Create a file in the /tmp directory
			script: contents: """
				echo test > /tmp/example
				"""

			// 2. Export the `/tmp` dir of the container and make it accessible to any other action as a `dagger.#FS`
			export: directories: "/tmp": dagger.#FS

			// Context: Always run this action, bypassing the cache
			always: true
		}
	}
}

A simplified visual representation of the above plan would be: